「Utilizing AI to Enhance Information Integrity」Citizens’ Deliberative Assembly

1. Assessment Results of Online Citizens’ Deliberative Assembly

i. Comprehensive Recommendations

The citizen group provided detailed opinions on the four main discussion subtopics. These opinions reflect diverse perspectives and show the deep concerns of digital professionals and citizen groups regarding AI ethics, social responsibility, and public governance amid the rapid development and application of AI technologies. Citizens extensively exchanged views on balancing enhanced self-regulation by major platform operators with government oversight, covering technical, regulatory, and educational aspects. The exchange reflects civil society's expectations for improved AI regulation and its high regard for information integrity. Three key issues are summarized below, along with the integrated opinions of the citizen group:

- A. Enhanced Establishment of AI Regulatory Frameworks and Standards:

Digital practitioners expressed profound concerns about enhancing AI regulatory frameworks and establishing standards. Discussions among citizens reflected diverse perspectives on the tension between strengthening self-regulation by industry players and strengthening government oversight. On one hand, some citizens advocate for major platforms to enhance self-regulation based on community and public feedback to raise standards for information integrity. They suggest that major platforms develop corresponding regulations and standards based on industry norms and with reference to international rules, to maintain market order and protect consumer rights. On the other hand, the citizen group believes that stronger government oversight is necessary to ensure responsible application and use of AI technology, especially where public interests and sensitive areas are concerned. It recommends that governments establish strict standards and punitive measures, imposing hefty fines for AI abuse that undermines information integrity, while emphasizing that prevention matters more than punishment after the fact. There is also a call to suspend the use of AI systems found to compromise information integrity until they undergo review and improvement.

- B. Enhancing Large Platform Capabilities in Information Analysis and Recognition Using AI:

Digital practitioners and citizen groups advocate for large platforms to utilize AI in assisting citizens in analyzing emerging threats to information integrity, focusing on how AI technology can enhance information analysis and recognition to address challenges such as misinformation and information manipulation. The citizen group believes AI can serve as a tool to enhance citizens' ability to assess the integrity of information but also emphasizes the need to enhance public digital literacy. It further stresses prevention, strongly recommending proactive measures through education and raising user awareness and literacy to reduce reliance on after-the-fact remedies. The citizen group leans towards supporting the provision of unique anonymous digital IDs for each user and advocates for large platforms' generative AI to cite diverse data sources, relying primarily on peer-reviewed scientific information or mainstream news media reports.

- C. Establishment and Improvement of Evaluation Mechanisms:

The citizen group believes that clear evaluation mechanisms can help ensure that information sharing and dissemination on large platforms comply with integrity and accuracy standards, thereby reducing the circulation of errors and misinformation. Establishing evaluation mechanisms for AI products and systems ensures responsible AI technology usage by large platforms, helping to protect users from potential AI misuse and misconduct. Transparent evaluation processes and standards not only enhance platform credibility but also help build and maintain public trust in AI technology applications.

The citizen group is concerned about how to establish evaluation mechanisms for large platforms, both to assess the effectiveness of the message analysis and recognition tools in AI products and systems and to supervise and evaluate the platforms' responsibility in using AI technology. Considerations and opinions regarding related policy and mechanism design include developing new evaluation frameworks and projects, establishing certification and trust rating systems for AI technologies and platforms, publicly and transparently disclosing regulatory and evaluation criteria, and expanding opportunities for user and citizen participation rather than relying solely on experts and scholars.

ii. Feedback on Policy Implications from Discussion Synthesis:

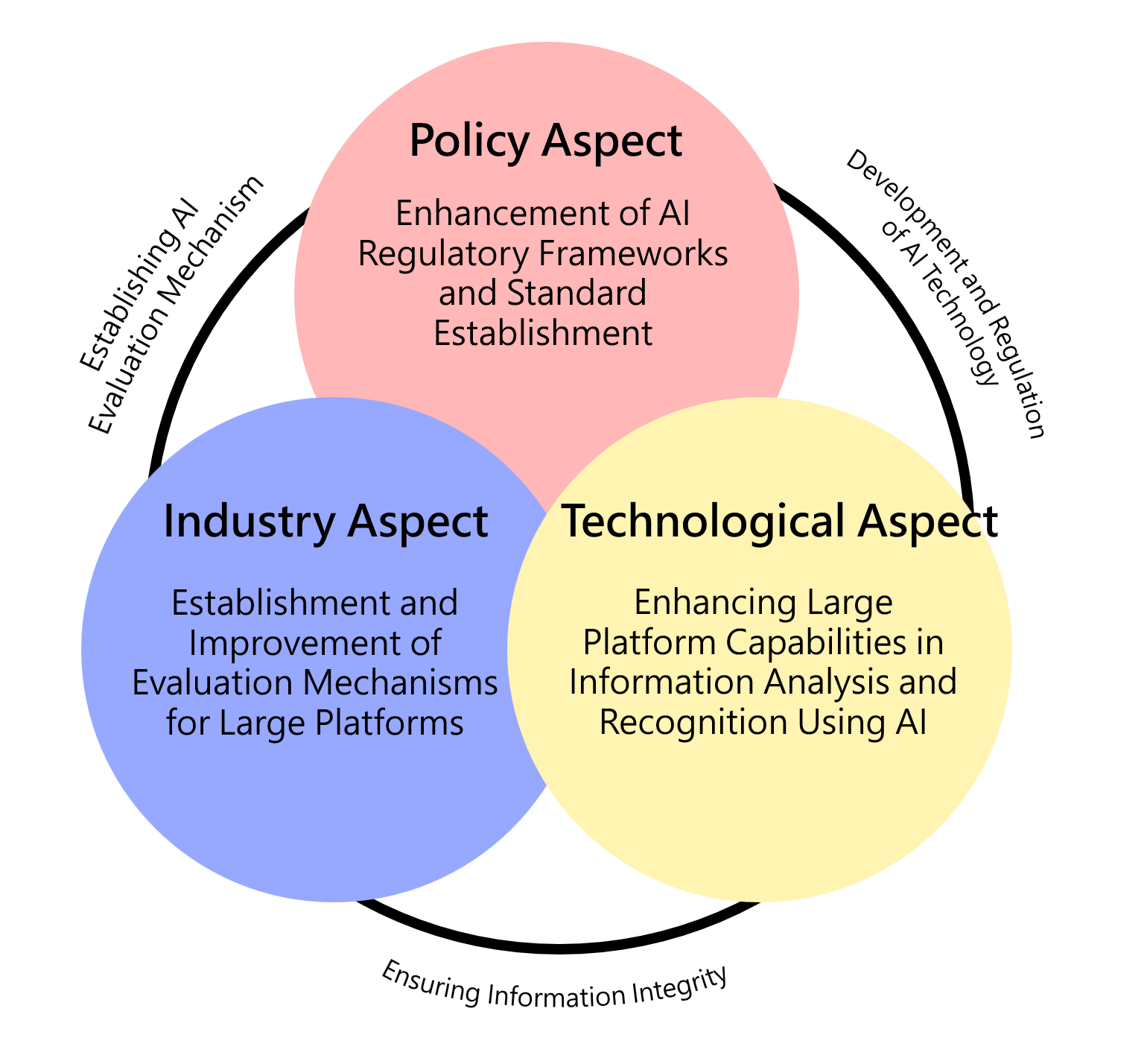

Through extensive discussions facilitated by citizen groups, a wide array of opinions was exchanged regarding the balance between enhancing self-regulation among large platform operators and government oversight. These discussions covered policy, industry, and technological aspects, reflecting civil society's expectations for improved AI regulation and a high regard for information integrity. Furthermore, participation by both individual citizens and digital practitioners adequately reflected the opinions of the public and of professionals, serving as a paradigm of public-private cooperation and providing a representative basis and key reference for the legitimacy of national governance. The following summarizes and elaborates on the discussion results, along with illustrative diagrams:

- A. Policy Aspect: Enhancement of AI Regulatory Frameworks and Standard Establishment

Regarding policy formulation, the citizen group expressed diverse perspectives on enhancing the establishment of AI regulatory frameworks and standards. Firstly, citizens advocated for major platforms to enhance self-regulation based on community and public feedback to raise standards for information integrity. It's recommended that major platforms should develop corresponding regulations and standards based on industry norms and reference international rules to maintain market order and protect consumer rights. On the other hand, citizens believe stronger government oversight is necessary to ensure responsible AI technology application and usage, especially concerning public interests and sensitive areas. Citizens suggest that governments should establish strict standards and punitive measures, and advocate for the suspension of AI systems found to compromise information integrity until they undergo review and improvement.

- B. Industry Aspect: Establishment and Improvement of Evaluation Mechanisms for Large Platforms

On the industry regulatory level, the citizen group believes that clear evaluation mechanisms can help ensure that information sharing and dissemination on large platforms comply with integrity and accuracy standards, thereby reducing the circulation of errors and misinformation. Establishing evaluation mechanisms for AI products and systems ensures responsible AI technology usage by large platforms, helping to protect users from potential AI misuse and misconduct. Transparent evaluation processes and standards not only enhance platform credibility but also help build and maintain public trust in AI technology applications. The citizen group is also concerned about and anticipates participating in establishing evaluation mechanisms for large platforms to test the effectiveness of AI product and system message analysis and recognition tools, as well as to supervise and evaluate their responsibility in AI technology usage.

- C. Technological Aspect: Enhancing Large Platform Capabilities in Information Analysis and Recognition Using AI

At the application level of AI technology, the citizen group supports the use of AI by large platforms to assist citizens in analyzing emerging threats to information integrity, focusing on how to utilize AI technology to enhance information analysis and recognition to address challenges such as misinformation and information manipulation. The citizen group believes AI can serve as a tool to enhance citizens' ability to assess the integrity of information, and advocates for large platforms' generative AI to cite diverse data sources when referencing data. It suggests that peer-reviewed scientific information or mainstream news media reports be prioritized. However, it also emphasizes that this must go hand in hand with efforts to enhance public digital literacy.

2. Summary

The Ministry of Digital Affairs (moda) is committed to promoting trustworthy AI and a digital trust environment. The "AI Product and System Evaluation Center" was established on December 6, 2023. To understand and advocate the importance of using AI to promote information integrity, the Administration for Digital Industries (ADI) held the "Utilizing AI to Enhance Information Integrity" Online Citizens' Deliberative Assembly on March 23, 2024, inviting experts, scholars, citizens, communities, and digital practitioners to discuss issues related to using AI to identify and analyze the integrity of information.

The team of the National Yang Ming Chiao Tung University Institute of Technology and Society, in collaboration with the Stanford University Center for Deliberative Democracy, organized the nationwide online deliberative assembly "Towards Responsible AI Innovation" in 2022 and "How to Achieve Net Zero Carbon by 2050?" in 2021. The deliberative survey model has been adopted in more than 50 countries worldwide. The Stanford Center for Deliberative Democracy is internationally renowned for its deliberative survey research and has been commissioned to conduct nationwide projects in Japan, South Korea, Mongolia, etc., and has collaborated with Meta to host global community forums. The research team is committed to promoting the United Nations' 17 Sustainable Development Goals (SDGs), particularly pursuing SDG 16 Peace, Justice, and Strong Institutions, and promoting SDG 17 Global Partnerships.

The "College of Humanities and Social Sciences" aims to develop outstanding research in humanities and social sciences. Based on the existing cooperation with the Stanford University Center for Deliberative Democracy, it continues to deepen international cooperation, expand democratic networks, and strengthen global partnerships.

AI technology continues to advance, bringing many benefits and conveniences to our lives. However, simple and easy-to-learn "generative AI" software such as ChatGPT, deepfake tools, and AI drawing software also generates a great deal of false information and extremely realistic photos and videos (such as forged celebrity press conference videos, face-swapped inappropriate photos, and nonexistent government press releases and documents), which are often disseminated alongside real news. At the same time, people can use AI to help identify the source of information and to analyze its authenticity and complete background. How to use AI to identify and evaluate the authenticity of messages and maintain information integrity is an important issue that everyone needs to pay attention to and discuss. The citizen review conference provides an open and equal discussion platform for citizens from all over the country to discuss how large platforms should use AI technology to improve "information integrity," and related considerations.

The two sessions of the review activities had four discussion subtopics:

- (1) Strengthening self-regulation of large platform operators or strengthening government supervision?

- (2) How should large platforms use AI to "analyze" emerging threats to information integrity?

- (3) How should large platforms use AI to help users "maintain" information integrity?

- (4) How should large platforms use AI to "enhance" information integrity standards?

Multiple policy options and action plans were designed for the four major discussion topics, allowing participating citizens to fully examine, weigh, and discuss the choices of different solutions, to help citizens clarify and consider the potential impacts of better AI technology and systems, and comprehensively weigh and discuss solutions or measures to solve problems.

Therefore, the National Yang Ming Chiao Tung University, commissioned by the moda, analyzed the views of the Taiwanese people on how to use AI to promote information integrity, as well as the attitudes, preferences, and positions on policy issues after deliberation and discussion, and the changes in the level of support for policy proposals, as well as the evaluation of citizens on the deliberation activities. The opinions of the citizens and relevant stakeholders after the discussion will help establish more comprehensive and balanced AI evaluation standards, ensuring that the development of AI meets diverse needs and tends towards more objective and fair standards.

- A. Definition of Participant Representativeness

This "Utilizing AI to Enhance Information Integrity" assembly adopts the deliberative polling model (Deliberative Polling®). Nearly 450 citizens from all over the country (including digital practitioners) were recruited through random sampling and gathered on the Stanford Online Platform to discuss how large platforms should use AI to promote information integrity.

To help citizens understand the introduction of generative AI and information integrity, on February 6th, the project held an Expert Advisory Committee meeting in the afternoon, inviting 7 scholars and experts to provide advice on this event and discuss the content of reading materials provided to the public, as well as recommended expert candidates for dialogue with the public.

For the recruitment of citizens for this citizen review conference, 200,000 text messages were randomly sent out through the government 111 platform, and a total of 1,760 valid samples were collected. Among the samples collected, there were 573 digital workers (accounting for 32.5% of the samples). Based on gender, age, and residence variables, samples were selected to match the national population ratio. Then, according to the National Development Council's National Digital Development Research Report for the year 2021, the digitalization rate of employees in Taiwan was 53.6%, meaning that 53.6% of employees need to use computers or the internet during work.

On March 23rd, a total of 447 citizens attended both sessions of the review conference in the afternoon, randomly assigned to 44 citizen review groups, including 12 groups of digital practitioners and 32 groups of general citizen groups.

- B. Scope of Discussion Topics

AI is a highly promising field. However, while companies pursue the convenience of AI applications, issues such as ethics, system reliability, and privacy security have become concerns that need to be addressed as applications progress rapidly. Therefore, by strengthening information integrity, we can establish a world based on rules, democracy, security, and digital inclusion, achieve a safe and trustworthy information ecosystem, and promote the positive development of AI applications. The focus of this citizen deliberative assembly was on how large platforms offering AI services can promote the principle of "information integrity." Through communication, understanding how citizens, community users, and digital practitioners should use AI to enhance information credibility, identify and analyze information contexts, and promote information integrity.

The discussions at the assembly focused on four major discussion subtopics: (1) strengthening self-regulation of large platform operators or enhancing government supervision; (2) how large platforms should use AI to help citizens analyze emerging threats to information integrity; (3) how large platforms should use AI to help users maintain information integrity; and (4) how large platforms should use AI to enhance information integrity standards. A total of 44 citizen groups participated in the review conference. Based on the questions raised during the two sessions of citizen group discussions, ten major concerns were summarized:

- Transparency and citizen participation: whether AI regulatory audit criteria should be publicly disclosed in a transparent manner, and whether citizen representatives should participate in the policy-making process rather than relying solely on experts and scholars.

- Penalty setting and enforcement: how to set AI-related penalties, including fine standards for operators, and how to enforce these penalties against foreign companies.

- AI certification and grading system: how the government should establish a certification system and trust ratings for AI technologies and platforms.

- Regulatory technology and methods: what technologies and methods the government can adopt to regulate AI, especially for large platforms of multinational companies.

- Self-regulation of operators and consumer protection: how to incentivize operators to self-regulate, and whether more consumer protection measures need to be established outside of government regulation and operator self-regulation.

- Neutrality of evaluation and freedom of speech: how the government can ensure neutrality when evaluating AI technology, to avoid suppressing freedom of speech.

- Regulatory strategy and implementation: how the government should design and implement AI-related regulatory mechanisms to quickly and accurately reflect the actual situation.

- Severity of penalties and economic benefits: whether the fines set by the government are sufficiently severe to ensure that they do not lose effectiveness because they are lower than the profits from violations.

- Government judgment of the truth of content: how the government judges the truth of AI-generated content and ensures the fairness and justice of such regulation.

- Technological progress and government response: whether the government's technology and techniques can keep up with developments in AI technology and even be deployed in advance to respond to rapidly developing AI technologies.

- C. Tracking and Implementation of Policy Recommendations

In this assembly event, citizens engaged in discussions and exchanges, and the online platform provided mechanisms to facilitate citizen discussions, with well-designed deliberation scenarios and opportunities for fair discussion. These included automated management of speaking time for citizen groups, a speaking order, gentle encouragement of less vocal participants, and real-time transcripts and records. Finally, the decision-making process can be finalized through voting or other forms of decision-making procedures to determine the actions or policies to be taken.

The advantages of online deliberative democracy activities include promoting citizen participation. Since the activities are conducted online, people can participate more easily without being limited by time and location, thereby expanding the range of participants. Furthermore, deliberative democracy increases policy transparency, as the discussion and decision-making processes can be recorded and open to all participants, helping to increase the transparency and openness of the policy-making process. Lastly, since the activities are conducted online, it is more convenient to encourage citizens to speak up, collect opinions, hold discussions, and make decisions, thus improving the efficiency of decision-making. Online deliberative democracy activities provide a convenient and effective platform for more people to participate in political discussions and decision-making, thereby promoting democratic participation and political transparency.

In response to public expectations, the citizen opinions generated from this citizen review event were promptly discussed by major international AI companies on April 17th, covering issues such as strengthening platform analysis and identification mechanisms and submitting large language models (LLMs) to the AI Evaluation Center (AIEC). The participating companies all agreed that AI must possess important characteristics such as trustworthiness, accuracy, and security. They pointed out that during the development of language models, their teams are responsible for continuous testing and training adjustments to their models to meet the expectations of reliable AI systems. They also expressed a positive attitude towards providing the language models they are developing for evaluation by the AIEC. In addition, regarding the analysis and identification of generative AI content, the companies presented at the meeting relevant practices that have been established or are about to be introduced, including AI-generated content detection technologies such as SynthID, traceability labeling and signing, user reporting mechanisms, and reminders to users to pay attention to AI-generated content.

The opinions of the public and international AI companies collected and summarized through this event also correspond to the draft Fraudulent Crime Harm Prevention Act (Anti-Fraud Act) recently proposed by the Executive Yuan. The draft imposes additional responsibilities on online advertising platforms, requiring that advertisements they publish or broadcast not contain fraud-related content, in order to strengthen AI analysis and identification capabilities. Furthermore, if advertisements published or broadcast by online advertising platforms contain personal images generated using deepfake technology or artificial intelligence, this should be clearly disclosed in the advertisement, in line with public expectations. It is hoped that all businesses will effectively comply with the regulations to safeguard the majority of internet users who act in good faith.

3. Methods

Participants were recruited through random text message invitations sent to the public. At least 400 respondents willing to participate were selected using a stratified random sampling method. Samples were chosen based on variables such as residence, gender, and age to match the population ratios of Taiwan (according to population data from the Ministry of the Interior (MOI)). Additionally, considering that 53.6% of the workforce in Taiwan requires computer or internet access during work hours (National Development Council, 2021), approximately half of the participants were selected to represent individuals who require computer or internet access during work.
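The quota-matching step described above can be sketched as follows. This is a minimal illustration, not the project's actual procedure: the function names, the largest-remainder rounding rule, and the record layout are all assumptions introduced here. It allocates a fixed number of seats to strata in proportion to population counts, then draws respondents at random within each stratum.

```python
import random
from collections import defaultdict


def proportional_quotas(population_counts, total):
    """Split `total` seats across strata in proportion to population counts.

    Uses integer arithmetic with largest-remainder rounding (an assumed rule)
    so that the quotas always add up to exactly `total`.
    """
    n = sum(population_counts.values())
    quotas = {s: c * total // n for s, c in population_counts.items()}
    remainders = {s: c * total % n for s, c in population_counts.items()}
    leftover = total - sum(quotas.values())
    # Hand the seats lost to rounding to the strata with the largest remainders.
    for s in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        quotas[s] += 1
    return quotas


def stratified_sample(respondents, stratum_of, quotas, seed=0):
    """Randomly draw up to quotas[s] willing respondents from each stratum s."""
    rng = random.Random(seed)
    pools = defaultdict(list)
    for r in respondents:
        pools[stratum_of(r)].append(r)
    chosen = []
    for s, q in quotas.items():
        pool = pools.get(s, [])
        # If a stratum has too few volunteers, take everyone available.
        chosen.extend(rng.sample(pool, min(q, len(pool))))
    return chosen
```

In practice a stratum would be a combination such as (region, gender, age band), and a stratum with too few volunteers would leave a shortfall that later weighting has to correct.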

Furthermore, purposive sampling was conducted to recruit digital industry professionals (approximately 50 individuals, divided into 5 groups). The same questionnaire survey link was sent to digital and news media companies registered with the MOI, or through online social networks. Digital industry professionals who expressed willingness to participate were assigned to separate group discussions (with discussion topics like those of the public groups). The sampling plan outlined above is provisional, and the actual sampling proportions will be adjusted based on response rates and discussions with the sponsoring entity.

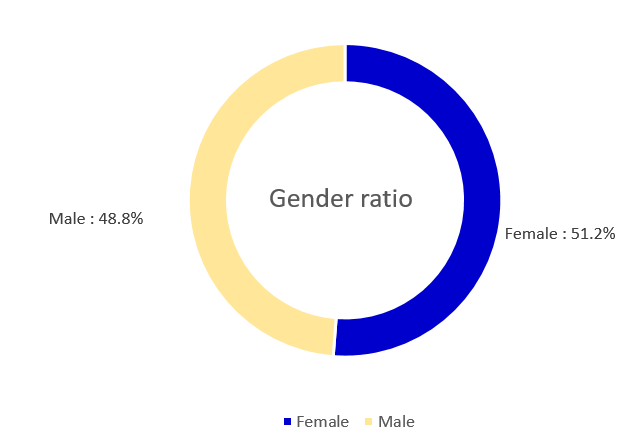

The survey was sampled according to the population proportions released by the MOI in January 2024 (year 113 of the Republic of China calendar), and was weighted using Iterative Proportional Fitting (IPF), also known as raking. After weighting, the data were checked to ensure that there were no significant differences between each demographic variable and the population. The weighting process involved conducting chi-square tests on the gender, age, and regional proportions of the sample and population data, with the population data stratified according to the levels established during sampling. There were a total of 436 participants in this session, with a gender ratio of 233 males (51.2%) and 213 females (48.8%).
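Iterative Proportional Fitting (IPF, also known as raking) repeatedly rescales respondent weights so that the weighted sample matches the population share on one variable at a time, cycling through the variables until the weights stop changing. The sketch below is a minimal pure-Python illustration under assumptions made here: the function names, the dictionary layout, and the stopping rule are hypothetical and not taken from the project.

```python
def rake(rows, targets, iterations=100, tol=1e-9):
    """Return one weight per respondent so weighted margins match `targets`.

    rows:    list of dicts, e.g. {"gender": "M", "age": "19-39"}
    targets: {variable: {category: population share}}, shares summing to 1
    """
    weights = [1.0] * len(rows)
    for _ in range(iterations):
        max_shift = 0.0
        for var, shares in targets.items():
            total = sum(weights)
            # Current weighted total of each category of this variable.
            current = {}
            for row, w in zip(rows, weights):
                current[row[var]] = current.get(row[var], 0.0) + w
            # Scale every respondent so this variable matches its target.
            for i, row in enumerate(rows):
                factor = shares[row[var]] * total / current[row[var]]
                weights[i] *= factor
                max_shift = max(max_shift, abs(factor - 1.0))
        if max_shift < tol:  # all margins already match: converged
            break
    return weights


def weighted_share(rows, weights, var):
    """Weighted share of each category of `var`."""
    total = sum(weights)
    shares = {}
    for row, w in zip(rows, weights):
        shares[row[var]] = shares.get(row[var], 0.0) + w / total
    return shares
```

Every category named in `targets` must appear at least once in the sample; a category with no respondents cannot be weighted up and has to be handled separately.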

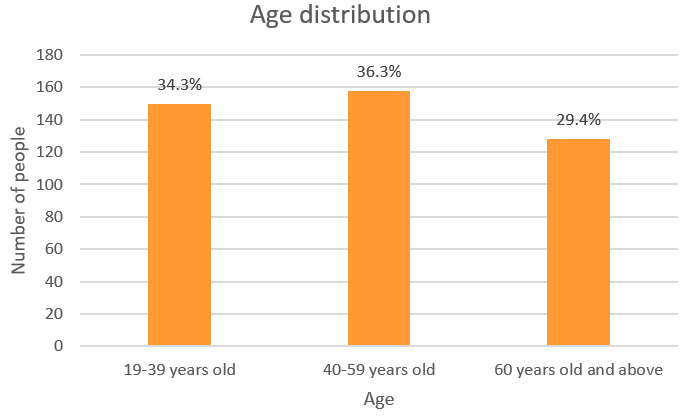

In terms of age distribution, most participants fell within the 40-59 age range, accounting for approximately 36.3%. Following that, the 19-39 age group accounted for about 34.3%, while those aged 60 and above comprised 29.4% of the participants. This indicates that most participants in this discussion belonged to the younger and middle-aged generations, with fewer participants aged 60 and above showing interest in participating in online deliberative assembly compared to other age groups. The likely reasons for this could be related to the relevance of the topics discussed, professional thresholds, levels of social engagement, and familiarity with online meeting operations. Please refer to the chart below for visual representation:

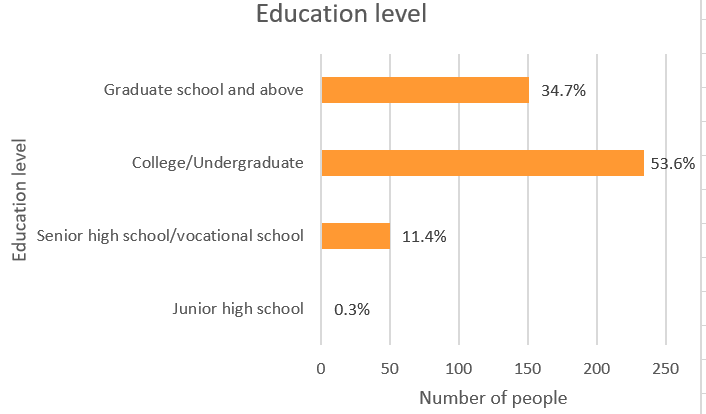

In terms of educational attainment among participants, the majority held a college degree, accounting for approximately 53.6%. Following that, those with graduate degrees or above comprised 34.7% of the participants, while those with high school, vocational school, or junior high school education accounted for 11.7%. This indicates that the discussion primarily involved participants with a college education or higher. Please refer to the chart below for visual representation:

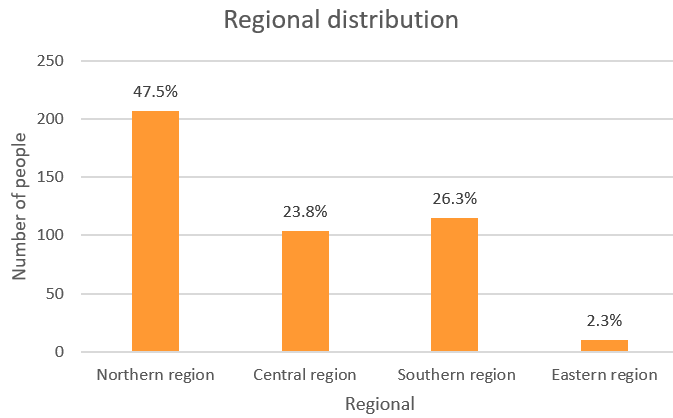

In terms of regional distribution among participating citizens, the majority were from the Northern region, with a total of 207 participants, accounting for 47.5% of the total. The Southern region followed with a percentage of 26.3%, the Central region with 23.8%, and finally the Eastern region with 2.3%. Although samples were drawn from the Kinmen and Matsu region, unfortunately, there was no participation from residents of these areas. Therefore, during weighting, if the P-value is less than 0.05, indicating a significant difference, the percentage originally allocated to the Kinmen and Matsu region will be proportionally redistributed to the other four regions.
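The two operations mentioned above, proportionally redistributing the share of a region with no participants and checking the sample against the population with a chi-square test, can be sketched as follows. The function names are hypothetical. The constant 7.815 is the standard 0.05 critical value of the chi-square distribution with 3 degrees of freedom (four regions minus one), used here in place of computing a P-value.

```python
CHI2_CRITICAL_DF3 = 7.815  # chi-square 0.05 critical value for 3 degrees of freedom


def redistribute(shares, empty):
    """Drop the categories in `empty` and rescale the rest to sum to 1."""
    kept = {k: v for k, v in shares.items() if k not in empty}
    scale = sum(kept.values())
    return {k: v / scale for k, v in kept.items()}


def chi_square_stat(observed, expected_shares):
    """Goodness-of-fit statistic: sum of (observed - expected)^2 / expected."""
    n = sum(observed.values())
    return sum(
        (observed[k] - n * p) ** 2 / (n * p) for k, p in expected_shares.items()
    )


def differs_significantly(observed, expected_shares, critical=CHI2_CRITICAL_DF3):
    """True when the sample departs from the population at the 0.05 level."""
    return chi_square_stat(observed, expected_shares) > critical
```

A statistic above the critical value corresponds to a P-value below 0.05, which is the condition under which the text says the Kinmen and Matsu share is redistributed.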

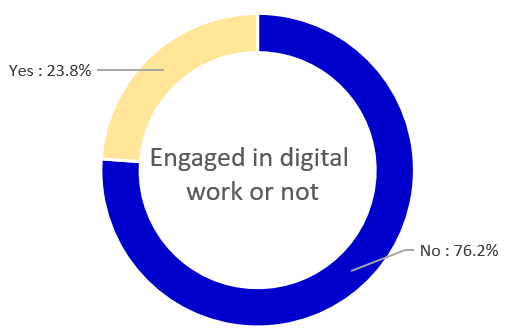

In terms of the distribution of digital workers among participants, there were 104 participants engaged in digital work, accounting for 23.8% of the total. The majority, consisting of 332 participants, were non-digital practitioners, representing 76.2% of the total.

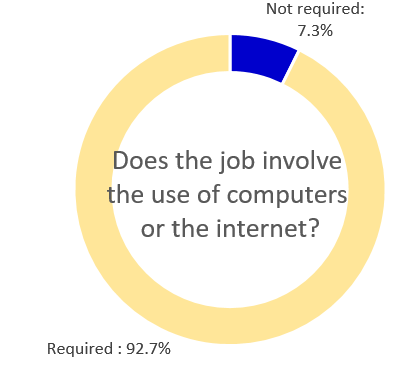

The survey for this project investigated whether participants needed to use a computer or the internet for work. Among those who indicated a need, there were 404 participants, accounting for 92.7% of the total. Those who did not require the use of a computer or the internet represented 7.3%.

National Yang Ming Chiao Tung University, in collaboration with the Stanford Online Platform, recruited 436 citizens nationwide through random sampling via SMS using the government's 111 SMS platform. Additionally, purposive sampling was employed to recruit digital practitioners, totaling approximately 50 individuals across 5 groups. These participants convened on the Stanford Online Platform to discuss how large platforms should utilize AI to promote information integrity.

The Citizens' Deliberation Assembly provided participants with comprehensive policy issue information. Through deliberation in citizen groups and expert dialogues, issues were clarified, and the pros and cons of policy proposals were examined in depth. This process generated policy opinions from citizens and digital workers regarding AI evaluation systems and how AI can be used to enhance information integrity. These opinions can serve as references for relevant authorities in formulating AI regulations and standards.

The project team drafted and edited issue manuals, which were reviewed and advised upon by AI and related domain scholars and experts. These manuals provided basic information for citizen participants entering discussions, covering an introduction to generative AI and information integrity. They also presented balanced statements on the two discussion topics of the conference sessions and various arguments for and against each proposal, aiding citizens in understanding, contemplating, and participating in discussions.

On February 6th, an expert advisory committee meeting was held, inviting 7 scholars and experts to provide consultation on the event and discuss the content of reading materials provided to the public, as well as recommend expert candidates for dialogue with the public.

The collaboration between National Yang Ming Chiao Tung University and the Stanford Online Platform facilitated structured and fair dialogue opportunities through an online platform designed for group discussions. This platform, developed by Stanford University's Center for Deliberative Democracy and Crowdsourced Democracy Team, randomly assigned participants to online citizen deliberation groups of 10 people. Throughout the process, the platform provided information on various opinions on policy issues to facilitate structured discussions. Its features include time management for speeches, issue management, prevention of inappropriate language, assistance for fair participation, display of the discussion agenda and issue information, subtitle buttons, real-time recording of speech content, and visualization of speaking activity, overcoming time constraints and expanding the scale of participation.
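Random assignment to groups of roughly ten can be sketched as a shuffle followed by round-robin dealing. This is only an illustration: the platform's actual assignment algorithm is not described in this report, and the function name and seed parameter are assumptions made here.

```python
import random


def assign_groups(participant_ids, n_groups, seed=None):
    """Shuffle participants, then deal them round-robin into n_groups groups.

    Group sizes differ by at most one, so 447 participants in 44 groups
    yields groups of 10 or 11.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    groups = [[] for _ in range(n_groups)]
    for i, pid in enumerate(shuffled):
        groups[i % n_groups].append(pid)
    return groups
```

Because digital practitioners deliberated in their own groups, such a routine would be run separately for practitioners and for general citizens.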

The following briefly outlines the conceptual design and operational plan for this activity:

Two short introductory videos (approximately 4.5 minutes each) were produced to be played before discussions commenced in citizen groups, aiming to stimulate citizens' ideas and thoughts on the proposals outlined in the issue manual and focus the discussions. The videos primarily conveyed how AI technology, while continuously advancing and bringing many benefits and conveniences to our lives, also generates a lot of misinformation and extremely realistic photos and videos through simple and easy-to-learn "generative AI" software like ChatGPT, deepfakes, or other AI drawing software. These contents are often disseminated alongside genuine news. However, people can also use AI to help us identify the source of information and analyze its authenticity and complete background. Through discussion, this activity explored how to utilize AI to identify and evaluate the authenticity of information and maintain information integrity.

The two deliberation sessions consisted of four discussion subtopics:

- Enhancing self-regulation by large platform operators or strengthening government oversight?

- How should large platforms use AI to help citizens "analyze" emerging threats to information integrity?

- How should large platforms use AI to help users "maintain" information integrity?

- How should large platforms utilize AI to "enhance" information integrity standards?

During online deliberations, relevant information on the discussion topics was available on the platform for participants to review and engage in structured discussions at any time. After thorough discussion and deliberation by citizen groups, two questions deemed most important were raised for experts during the main expert dialogue session. This session included responses from eight scholars and experts from interdisciplinary fields such as computer science, AI law, humanities and social sciences, communication technology, computational linguistics, among others. Following the deliberation, a post-test survey was conducted to understand participants' attitude changes and knowledge on policy issues, as well as to gather feedback on the activity.

For the recruitment of citizens in this civic deliberation assembly, 200,000 government 111 text messages were randomly sent out, resulting in a total of 1,760 valid responses. Among the 1,760 valid responses, there were 573 responses from digital practitioners, accounting for 32.5% of the sample. The project selected samples according to gender, age, and residential area variables to match the national population ratios. Subsequently, based on the National Digital Development Research Report for the year 2021, which indicated that 53.6% of employees require computer or internet usage for work, a sample was selected in which 53.6% of members need computers or the internet during work hours.

On March 23, a total of 447 citizens attended both sessions of the deliberation assembly throughout the afternoon, randomly distributed among 44 citizen deliberation groups: 12 groups of digital practitioners and 32 groups of general citizens. The survey results showed that citizens found the discussion topics more complex than they had originally thought and that, through deliberation, they gained a better understanding of the challenges facing AI technology development. After deliberation, attitudes towards policy proposals tended to be cautious rather than extreme. About 85% of citizens believed that large platforms should enhance self-regulation and take primary responsibility for improving information integrity and identifying the risks posed by generative AI technologies. Up to 89% of citizens supported large platforms implementing new technologies to detect whether specific content on the platform was generated by AI, an increase of 2.6 percentage points after deliberation. Support for the proposal to add information integrity evaluation criteria for AI products and systems increased slightly, with 87.9% of citizens expressing support. Citizens' quiz scores increased after deliberation, and overall, citizens evaluated the civic deliberation assembly positively.

This survey used paired-sample t-tests to analyze changes in attitudes towards the various issues after deliberation. Each question in the questionnaire (except the knowledge questions) was rated on a scale of 0-10, with 10 indicating the most positive or supportive answer and 0 indicating the most negative or opposing answer. The average scores before and after the deliberation were calculated and compared with paired-sample t-tests. In addition, to present the distribution of the sample, the original 0-10 scores were reclassified into percentages indicating disagreement or non-support (0-4 points), neutrality (5 points), and agreement or support (6-10 points). For the knowledge questions, each correct answer scored 1 point, while incorrect or "don't know" answers scored 0 points. Each participant's total knowledge score before and after deliberation was likewise analyzed with a paired-sample t-test.
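The analysis described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the project's actual analysis code; the `pre` and `post` scores are made-up values, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (mean difference, t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    d_bar = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_stat = d_bar / (sd / math.sqrt(len(diffs)))
    return d_bar, t_stat, len(diffs) - 1


def classify(score):
    """Map a 0-10 rating to the three bands used in the report."""
    if not 0 <= score <= 10:
        raise ValueError("score must be between 0 and 10")
    if score <= 4:
        return "disagree"
    if score == 5:
        return "neutral"
    return "agree"


def distribution(scores):
    """Percentage of respondents in each band."""
    counts = {"disagree": 0, "neutral": 0, "agree": 0}
    for score in scores:
        counts[classify(score)] += 1
    return {band: 100 * n / len(scores) for band, n in counts.items()}


# Made-up ratings for eight hypothetical respondents (same person, same index).
pre = [6, 7, 5, 8, 4, 9, 7, 6]
post = [7, 8, 5, 8, 6, 9, 8, 7]

d_bar, t_stat, df = paired_t(pre, post)
change_in_agreement = distribution(post)["agree"] - distribution(pre)["agree"]
print(d_bar, t_stat, df, change_in_agreement)
```

For these made-up scores, the mean difference is 0.75 and the t statistic is 3.0 with 7 degrees of freedom, while agreement rises from 75.0% to 87.5%, a change of 12.5 percentage points. Turning the t statistic into a p-value requires the t distribution, for which a statistics package such as SciPy (`scipy.stats.ttest_rel`) could be used instead.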

3.4.1 Perception of the Positive Impact of AI on Society and Individuals

Agreement increased across the following three aspects:

- Your Daily Life: The agreement percentage in the post-test is 76.5%, an increase of 9.3 percentage points.

- Your Work Environment: In the post-test, the agreement percentage is 71.3%, representing an increase of 11.5 percentage points.

- Your Personal Life: The agreement percentage in the post-test is 72.2%, showing an increase of 14.6 percentage points.

3.4.2 Perception of the Negative Impact of AI on Society and Individuals

Agreement also increased across all three aspects:

- In daily life, the agreement rate in the post-test is 34.9%, an increase of 18.8 percentage points.

- In your work environment, the agreement rate in the post-test is 29.2%, an increase of 11.9 percentage points.

- In your personal life, the agreement rate in the post-test is 28.8%, an increase of 11.9 percentage points.

3.4.3 Enhance self-regulation of large platform operators, or strengthen government supervision?

After deliberation, agreement remained high, at roughly 85%, on each of the items concerning whether to strengthen self-regulation by large platform operators or to enhance government supervision:

- Large platforms should bear primary responsibility for enhancing information integrity, identifying risks associated with generative AI technologies. The post-test agreement proportion is 85.3%, a decrease of 3.2 percentage points.

- The government should bear primary responsibility for enhancing information integrity, identifying risks associated with generative AI technologies. The post-test agreement proportion is 86.2%, a decrease of 1.0 percentage points.

- The government should impose substantial fines on large platforms that harm information integrity through erroneous or abusive use of AI. The post-test agreement proportion is 85.5%, a decrease of 4.4 percentage points.

- The government should require specific AI systems or algorithms found to violate information integrity standards to suspend use until they undergo review and corrective measures. The post-test agreement proportion is 83.5%, a decrease of 6.9 percentage points.

3.4.4 Large platforms should leverage AI to assist users in analyzing emerging threats to information integrity

- Up to 89% of citizens agree that large platforms should implement new technologies to detect whether certain content on the platform is generated by AI, an increase of 2.6 percentage points.

- Large platforms should require users to provide labels or watermarks to alert viewers which information and videos are AI-generated. The post-test agreement proportion is 89.7%, a decrease of 2.5 percentage points.

- Large platforms should automatically detect if some posts contain AI-generated content. If so, they should label and inform viewers that these posts may contain AI-generated content. The post-test agreement proportion is 88.4%, a decrease of 4.4 percentage points.

- Large platforms should notify users who have encountered misinformation and provide them with the correct information. The post-test agreement proportion is 82.1%, a decrease of 6.7 percentage points.

- Large platforms should use AI to detect users who are frequently exposed to misinformation and provide training courses for identifying misinformation. The post-test agreement proportion is 75.0%.

3.4.5 How should large platforms utilize AI to assist users in maintaining information integrity?

- The evaluation center for AI products and systems should add evaluation criteria for information integrity, testing whether AI language models meet the standards. 87.9% of citizens agreed, with the average score increasing from 8.14 to 8.19.

- When providing information, large platforms' generative AI should draw on diverse information sources. The post-test agreement rate is 90.1%, a decrease of 2.7 percentage points.

- When referencing sources, large platforms' generative AI should rely primarily on internationally accredited information. The post-test agreement rate is 83.7%, a decrease of 2.9 percentage points.

- Large platforms should temporarily suspend users found to severely violate information integrity standards until they undergo review and take corrective action. The post-test agreement rate is 86.9%, a decrease of 4.9 percentage points.

3.4.6 How should large platforms better utilize AI to enhance information integrity standards?

All four aspects showed a decrease in agreement in the post-test:

- Large platforms should be obliged to transparently disclose the AI algorithms used for content and data analysis. Post-test agreement percentage decreased by 27.5 percentage points to 55.6%.

- Large platforms should include educational segments with preventive content in users' news feeds, teaching users how to identify information sources or AI-generated content. Post-test agreement percentage decreased by 9.3 percentage points to 82.7%.

- Large platforms should establish a low-threshold channel that allows users to easily report content or appeal reports made against them. Post-test agreement percentage decreased by 8.4 percentage points to 81.9%.

- The fact-checking mechanism should be overseen and evaluated by an independent, representative civic oversight group. Post-test agreement percentage decreased by 4.3 percentage points to 79.4%.

3.4.7 The Importance of Personal Opinions

Both aspects show an upward trend:

- The agreement percentage regarding the importance of my opinion in my community in the post-test is 60.8%, rising by 17.3 percentage points.

- The agreement percentage regarding the importance of my opinion on social media in the post-test is 55.9%, rising by 12.0 percentage points.

3.4.8 Trust Levels Towards Various Entities

- Government Authority (moda): Post-test agreement rate is 68.2%, an increase of 9.2 percentage points.

- Scholars and Experts: Post-test agreement rate is 71.2%, an increase of 9.8 percentage points.

- Individuals in Community Life: Post-test agreement rate is 48.4%, an increase of 8.2 percentage points.

3.4.9 Increase in Knowledge

- The average score in the pre-test was 3.4774, while in the post-test, it rose to 3.9711, indicating an increase of approximately 0.5 correct answers per person. This suggests that participants were more capable of answering questions correctly after the session.

3.4.10 Citizen evaluations after the event

The evaluation of the event by respondents is generally positive, with average scores ranging from 7.01 to 8.11:

- The average score for group discussions is 7.01.

- The average score for the introductory handbook provided by the organizers is 8.00.

- The average score for the large-scale discussion between experts and citizens is also 8.00.

- Overall, the event received an average score of 8.00.

Overall, the respondents' attitudes towards the discussion platform are generally positive, with most average scores falling between 7.04 and 8.47:

- The online deliberation platform provided an opportunity for everyone to participate in discussions, with an average score of 8.47.

- In group discussions, each member participated relatively equally, with an average score of 8.12.

- The discussion platform ensured that opposing views were considered as much as possible, with an average score of 7.56.

- In group discussions, important aspects of the topics were covered, with an average score of 7.04.

Respondents' attitudes towards other aspects of the conference are also positive, with average scores ranging from 7.62 to 8.50:

- Learning a lot from participants with different opinions and their life experiences received an average score of 7.62.

- Understanding more about others' views on the topic after the discussion received an average score of 7.98.

- Gaining different perspectives, knowledge, and experiences from other participants received an average score of 7.83.

- Learning more arguments and opinions about the issue to refine personal opinions received an average score of 7.99.

- Considering the discussion process important received an average score of 7.90.

- Expressing a desire for the deliberative event to proceed as scheduled, even if unable to attend, received an average score of 8.43.

- Identifying and discussing very important issues during the deliberation process received an average score of 8.50.

Respondents' attitudes towards the conference's impact on individuals are generally positive, with average scores ranging from 6.22 to 7.56. Their attitudes towards whether it enhanced understanding of the discussion topics are also positive, with average scores ranging from 7.58 to 9.52:

- Whether knowledge about AI increased after participating in the activities and discussions received an average score of 7.56.

- The average score for the change in personal attitudes and positions regarding AI issues compared to before participating is 6.22.

- Understanding what generative AI is received an average score of 7.58.

- Understanding how large platform companies utilize AI technology to promote information integrity received an average score of 7.61.

- Understanding the challenges faced by AI technological innovation development received an average score of 9.52.

4. Conclusion and Recommendations

To support the moda's efforts to advance trustworthy AI and a digital trust environment, and to raise awareness of the importance of using AI to enhance information integrity, the online "Utilizing AI to Enhance Information Integrity" citizens’ deliberative assembly brought together experts, scholars, citizens, communities, and digital practitioners to discuss related topics.

This event generated three major innovations to assist in policy promotion. Due to the success of this event and the support from the public, similar activities will continue to be organized in the future to encourage the public to provide more policy suggestions based on their needs and perspectives.

- A. Building Trust among the Public through the 111 Government SMS Platform:

Using the 111 Government SMS Platform as a reliable channel for delivering government information enhances public confidence in that information. A publicly transparent random sampling method ensures diversity in societal participation. The moda encourages public participation through the 111 Government SMS Platform.

- B. Addressing Public Concerns through Immediate Discussion:

Recognizing that AI must be trustworthy, accurate, and secure, international corporations are strengthening their platform analysis and identification mechanisms. These include established or forthcoming practices such as SynthID and other technologies for detecting AI-generated content, traceable labels and signatures, user reporting mechanisms, and alerts for AI-generated content. The corporations are also positive about providing their language models for evaluation by the AIEC.

- C. Advocating for Legislation based on Public Feedback:

The feedback collected and compiled from the public and international AI corporations aligns with the Executive Yuan's recently released draft anti-fraud legislation (the Fraud Prevention Act). The draft stipulates that advertisements published or broadcast must not contain fraudulent content, which aims to strengthen the ability of AI to analyze and identify information. To meet public expectations, if an advertisement contains personal images generated using deepfake technology or artificial intelligence, this should be clearly disclosed in the advertisement.